I’ve used Three.js in two projects so far to handle 3D graphics, but I’ve been referencing it as a monolithic everything bundle. The Three.js documentation told me there was a better way:

When installing from npm, you’ll almost always use some sort of bundling tool to combine all of the packages your project requires into a single JavaScript file. While any modern JavaScript bundler can be used with three.js, the most popular choice is webpack.

— Three.js Installation Guide

In my magnetometer test project, I tried to bring in webpack to optimize three.js but aborted after getting disoriented. Now I’m going to sit down and read through the webpack documentation to get a better feel for what it’s all about. Here are my notes from a first look as a beginner.

I have a minor criticism about their home page. The first link is to their “Getting Started” guide, and the second link is to their “Concepts” section. I followed the first link to “Getting Started”, which is the first page in their “Guides” section. At the end of that page, the “Next” link led to Asset Management, the next guide page, and each guide page linked to the one after it. I quickly got into guide pages that used acronyms and terminology that had not yet been explained. Later I realized this was because the terminology was covered in the “Concepts” section. In hindsight, I should not have clicked “Next” at the end of the “Getting Started” guide. I should have gone back to “Concepts” to learn the lingo before reading the rest of the guides.

Reading through the guides, I quickly understood that webpack is a very JavaScript-centric system built by people who think JavaScript first. I wanted to learn how to use webpack to integrate my JavaScript code and my HTML markup, but these guide pages didn’t seem to cover my use scenario. Starting right off the bat with the Getting Started guide, they used code to build their markup:

const element = document.createElement('div');

element.innerHTML = _.join(['Hello', 'webpack'], ' ');

document.body.appendChild(element);

Wow. Was that really necessary? What about people who wanted to, you know, write HTML in HTML?

<body><div>Hello webpack</div></body>

Is that considered quaint and old-fashioned nowadays? I didn’t find anything in “Guides” or “Concepts” discussing such integration. I had thought the section on “HtmlWebpackPlugin” would have my answer, but it’s actually a chunk of JavaScript code that overwrote my index.html (destroying my markup) with a bare-bones index.html that loads the generated JavaScript bundles and has no markup of its own. How does my own markup figure in this picture? Perhaps the documentation authors felt it was too obvious to write down, but I certainly don’t understand how to do it. I feel like an old man yelling at a cloud: “Tell me how to use my HTML!”

I had thought putting my HTML into the output directory was a basic task, but I failed. And I was dismayed to see the “Concepts” and “Guides” pages get deeper and more esoteric from there. I understand webpack is very powerful and can solve many problems with modularizing JavaScript code, but it also requires a certain mindset that I have yet to acquire. Webpack’s bare-bones generated index.html looks very similar to the generated index.html of an Angular app (which uses both HTML and webpack), so there must be a way to do this. I just don’t know it yet.
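One lead worth recording for a future attempt: the html-webpack-plugin README describes a `template` option that is supposed to use an existing HTML file as the starting point and inject the bundle’s script tag into it. Below is a minimal sketch of what that configuration might look like. I have not run this myself, and the entry point, output folder, and `./src/index.html` path are all hypothetical names chosen for illustration.

```javascript
// webpack.config.js -- untested sketch; every path here is a hypothetical placeholder
const path = require('path');
const HtmlWebpackPlugin = require('html-webpack-plugin');

module.exports = {
  // Assumed location of the JavaScript entry point
  entry: './src/index.js',
  output: {
    filename: 'main.js',
    // Assumed output folder; the generated index.html is written here too
    path: path.resolve(__dirname, 'dist'),
  },
  plugins: [
    new HtmlWebpackPlugin({
      // Start from hand-written markup instead of the plugin's
      // default bare-bones page. Assumed to live in src/, outside
      // the output folder, so a build never overwrites the original.
      template: './src/index.html',
    }),
  ],
};
```

If this works the way the README suggests, keeping the hand-written index.html in the source folder rather than the output folder would also explain why my earlier attempt destroyed my markup: the plugin writes its generated page into the output directory.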

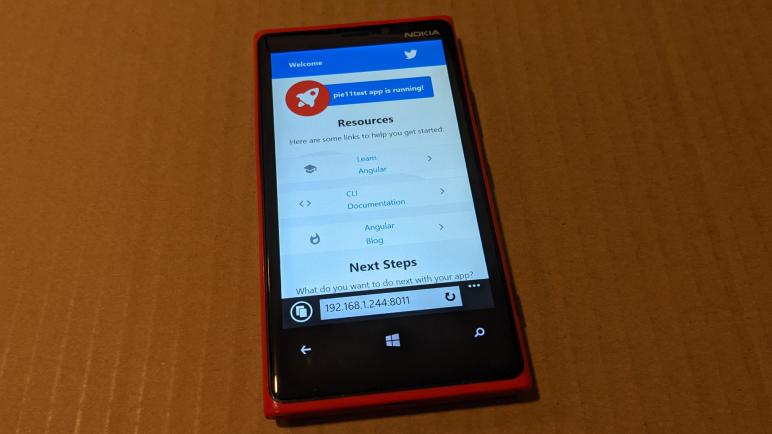

Until I learn what’s going on, I will use webpack only indirectly for the near future: it is already configured and running as part of the Angular framework’s command-line tool suite. I plan to do a few Angular projects to become more familiar with it. And now that I’ve read through webpack concepts, I should recognize pieces of the Angular workflow as “Aha, they used webpack to do that.”