A few years ago, I tried out the Google AIY “Voice” and “Vision” kits. They featured novel hardware, but that alone was not enough. For someone like me, not already well-versed in the AI software of the time, there was not enough documentation to get on board and do interesting things with that hardware. People like me could load the default demo programs and make minor modifications to them, but using the hardware for something new required climbing a steep learning curve.

At one point I mounted the box to my Sawppy rover’s instrument mast, indicating my aspirations to use it for rover vision, but I never got much of anywhere.

The software stack also left something to be desired: it was built on top of Raspberry Pi OS, but it was fragile and easily broken by Raspberry Pi updates. Reviewing my notes, I realized I had published my notes on AIY Voice, but the information on AIY Vision was still sitting in my “Drafts” section. Oops! Here it is for posterity before I move on.

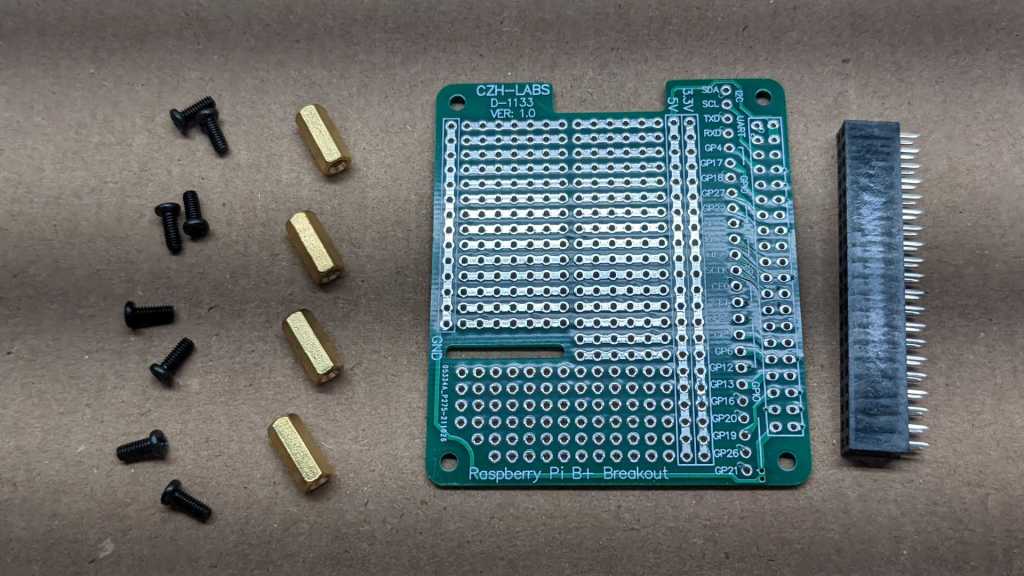

The product packaging is wonderful. This was from the era of Google building retail products from easily recycled cardboard. All parts were laid out and neatly labeled in a cardboard box.

There was no instruction booklet in the box, just a pointer to assembly instructions online. While fairly easy to follow, the instructions were written for people who already know how to handle bare electronic circuit boards: hold the boards by the edges, avoid touching components (especially electrical contacts), and so on. Complete beginners unaware of these basics might ruin their AIY kit.

From a hardware architecture perspective, the key is the AIY Vision bonnet that sits on top of a Raspberry Pi Zero WH. (W = WiFi, H = with presoldered header pins.) In addition to connecting to all Pi Zero GPIO pins, it also connects to the Pi camera connector for direct access to the camera feed. (Normal data path: Camera –> Pi Zero. AIY Vision data path: Camera –> Vision Bonnet –> Pi.) Besides the camera, there is a piezo buzzer for auditory feedback, a standalone green LED to indicate the camera is live (“Privacy LED”), and a big arcade-style button with embedded LEDs.

Once assembled, we could install and run several visual processing models posted online. If we want to go beyond that, there are instructions on how to compile trained TensorFlow models for hardware-accelerated inference by the AIY Vision Bonnet. And if those words don’t mean anything (they didn’t to me when I played with the AIY Vision) then we’re up a creek. That was bad back then, and now that a few years have gone by, things have gotten worse.

- The official Google AIY system images for Raspberry Pi haven’t been updated since April 2021. And we can’t just take one and pick up more recent updates, because that breaks bonnet functionality.

- The vision bonnet model compiler is only tested to work on Ubuntu 14.04, whose maintenance updates ended in 2019.

- Example Python code is in Python 2, whose support ended January 1st, 2020.

- Example TensorFlow information is for the now-obsolete TensorFlow 1. TensorFlow 2 was a huge breaking change, and it takes a lot of work (not to mention expertise) to migrate from TF1.x to TF2.

All of these factors together tell me the Google AIY Vision bonnet has been left to the dusty paths of history. My unit has only ever run the default “Joy Detection” demo, and I expect this AIY Vision Bonnet will never run anything else. Thankfully, the rest of the hardware (Raspberry Pi Zero WH, camera, etc.) should have better prospects of finding another use in the future.

While in the process of obtaining proof that a Raspberry Pi 3 is under-powered for certain ROS processing tasks like mapping, I took a little side trip into the world of Raspberry Pi thermal management. Anyone who has pushed the limits of a Raspberry Pi would have seen a thermometer icon in the upper right corner. A quick search finds that it is  This tool appears to be specific to the Raspberry Pi hardware and wraps a collection of tools to query hardware information. Official documentation seems pretty slim, not even an official name. Raspberry Pi forum users hypothesize it stands for “VideoCore General Commands” which is good enough for me.

The first useful tool is to measure temperature.
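As a rough illustration of reading that temperature from a script, here is a minimal Python sketch. It assumes the tool being described is `vcgencmd` (the name is my inference from the "VideoCore General Commands" expansion) and that `vcgencmd measure_temp` prints a line shaped like `temp=47.2'C`. The function names are hypothetical, made up for this example.

```python
import re
import subprocess


def parse_measure_temp(output: str) -> float:
    """Pull the Celsius value out of text shaped like "temp=47.2'C".

    The output format is an assumption based on what the command
    typically prints on a Raspberry Pi.
    """
    match = re.search(r"temp=([0-9]+(?:\.[0-9]+)?)'C", output)
    if match is None:
        raise ValueError(f"Unexpected measure_temp output: {output!r}")
    return float(match.group(1))


def read_soc_temperature() -> float:
    """Run `vcgencmd measure_temp` and return the reading in Celsius.

    Only works on a Raspberry Pi where vcgencmd is installed;
    elsewhere subprocess raises FileNotFoundError.
    """
    result = subprocess.run(
        ["vcgencmd", "measure_temp"],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_measure_temp(result.stdout)
```

On a Pi, calling `read_soc_temperature()` in a loop with a short sleep would give a crude temperature log while a heavy task runs. Keeping the parsing separate from the subprocess call means the parser can be checked on any machine.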

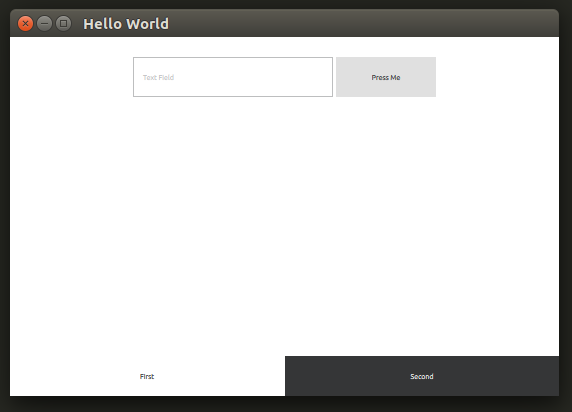

The prime motivation for me to go through Qt licensing documentation and installing Qt Creator IDE was to explore the new UI infrastructure introduced in Qt 5 under the umbrella of “

The solution: a timer. In a Windows application, the programmer can use the operating system timer and do their “after waiting a bit” tasks in response to the
