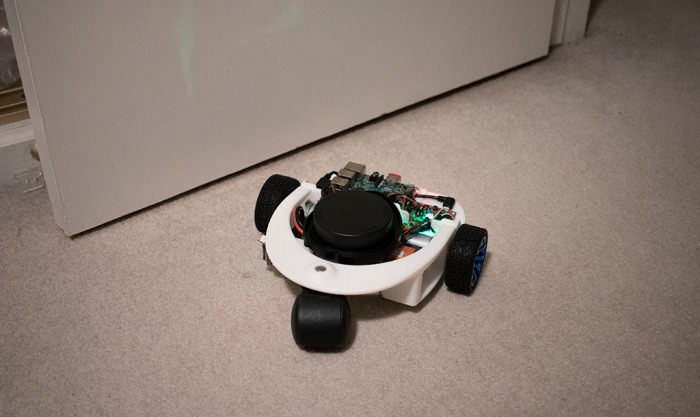

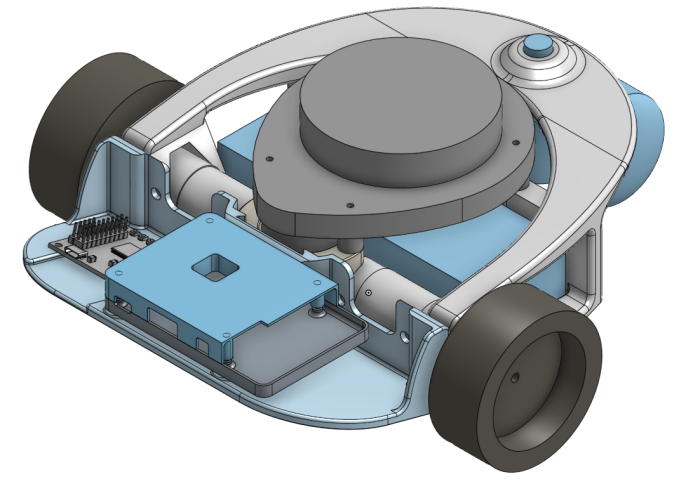

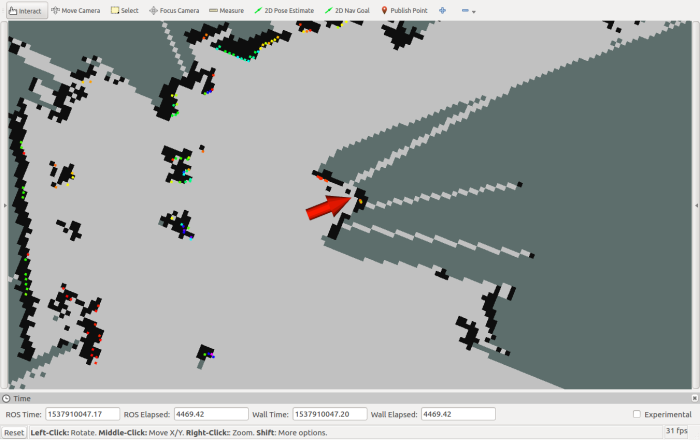

I started the Phoebe project with the goal of building something to apply what I’ve learned about ROS: to get some hands-on experience and learn the ropes. Now that Phoebe can map and autonomously navigate its environment, it is a good place to pause and evaluate potential paths forward. (Also: I have other demands on my time so I need to pause my Phoebe work anyway… and now is a great time.)

Option #1: Better Refinement

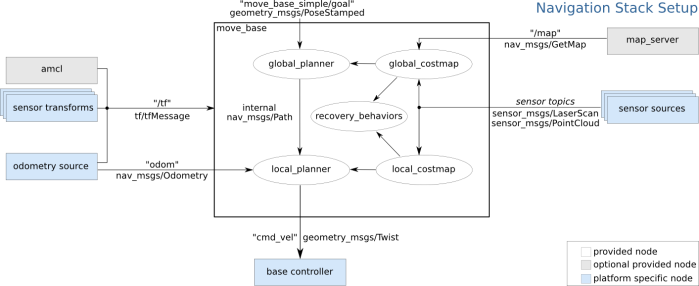

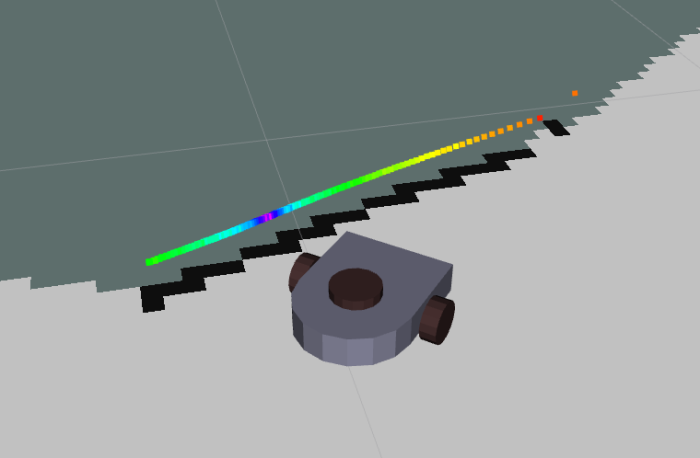

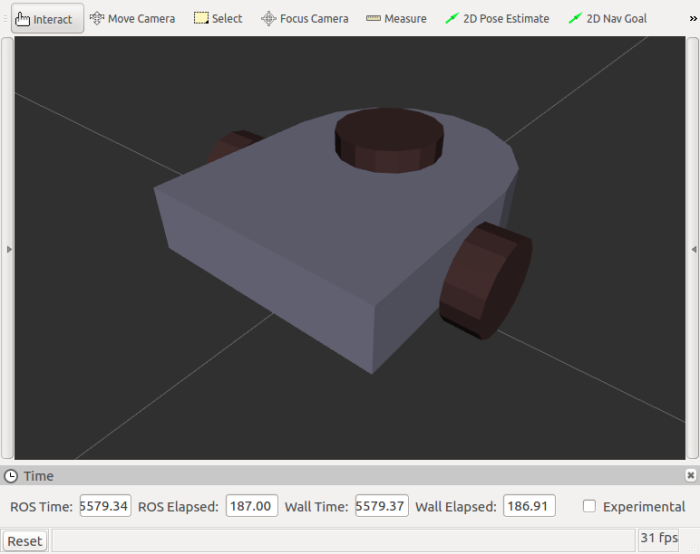

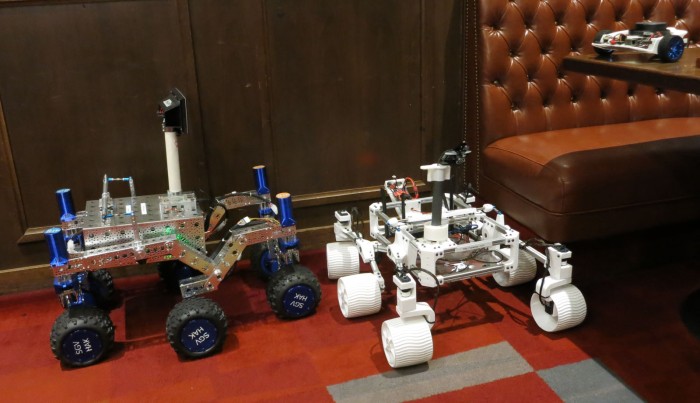

Phoebe can map its surroundings and then use that map to navigate the environment. This puts it on par with the baseline functionality of TurtleBot 3, though neither the mapping nor the navigation is as polished as on a TurtleBot built by people who know what they are doing. To get there, the parameters of Phoebe’s ROS modules need tuning to improve performance. There are also small bugs hiding in the system that need to be rooted out. I’m sure the ~100ms timing difference mystery is only the tip of the iceberg.

Risk: This is “the hard part” of not just building a robot, but building a good robot. And I know myself: without a clear goal and visible progress towards it, I’m liable to get distracted or discouraged, trailing off without ever really accomplishing anything.

Option #2: More ROS Functionality

I had been disappointed that the SLAM and navigation tutorials I’ve seen to date require a human to direct robot exploration. I had thought automated exploration would be part of SLAM but I was wrong. Thanks to helpful comments by Hackaday.io user Humpelstilzchen (who is building a pretty cool ROS robot too) I’ve now learned autonomous exploration is built on top of SLAM and Navigation.

So now that Phoebe can do SLAM and can navigate, adding one of the autonomous exploration modules would be the obvious next step.
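To make "built on top of SLAM and navigation" a little more concrete, here is a minimal sketch of one common idea, frontier-based exploration: look at the map SLAM has produced so far, find free cells that border unexplored cells, and hand one of them to the navigation stack as the next goal. This is only an illustration under my own assumptions, not how any particular ROS exploration module is implemented. The function name `find_frontier_cells` and the plain list-of-lists grid are hypothetical; the cell values follow the `nav_msgs/OccupancyGrid` convention (-1 unknown, 0 free, 100 occupied).

```python
from typing import List, Tuple

UNKNOWN = -1  # OccupancyGrid convention: cell not yet observed
FREE = 0      # OccupancyGrid convention: cell known to be empty


def find_frontier_cells(grid: List[List[int]]) -> List[Tuple[int, int]]:
    """Return (row, col) of every free cell that touches an unknown cell.

    Hypothetical helper for illustration; a real exploration module would
    also cluster frontiers and rank them before picking a navigation goal.
    """
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue  # only free cells can be reached by the robot
            # Check the four direct neighbors for unexplored space.
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


if __name__ == "__main__":
    # Tiny made-up map: one obstacle in the middle, unknown area on the right.
    demo_map = [
        [0, 0, -1],
        [0, 100, -1],
        [0, 0, 0],
    ]
    print(find_frontier_cells(demo_map))
```

In a real setup the grid would come from the SLAM node’s published map and the chosen frontier would be sent to the navigation stack as a goal, which is exactly why exploration needs both pieces working first.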

Risk: It’s another ROS learning curve to climb.

Option #3: More Phoebe Functionality

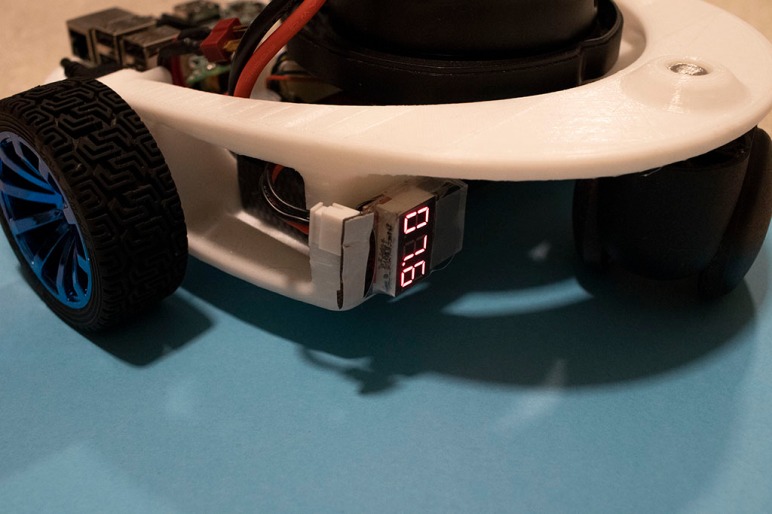

Phoebe has wheel encoders and a LIDAR as input, and it might be interesting to add more. Ideas have included:

- Obstacle detection to augment LIDAR, such as
  - Ultrasound distance sensor.
  - Infrared distance sensor (must avoid interference with LIDAR).
  - Bumpers with microswitches to detect collision.
- IMU (inertial measurement unit).
- Raspberry Pi camera or other video feed.

Risk: Over-complicating Phoebe, which was always intended to be a minimal-cost baseline entry into the world of ROS, following in the footsteps of the ROS TurtleBot.

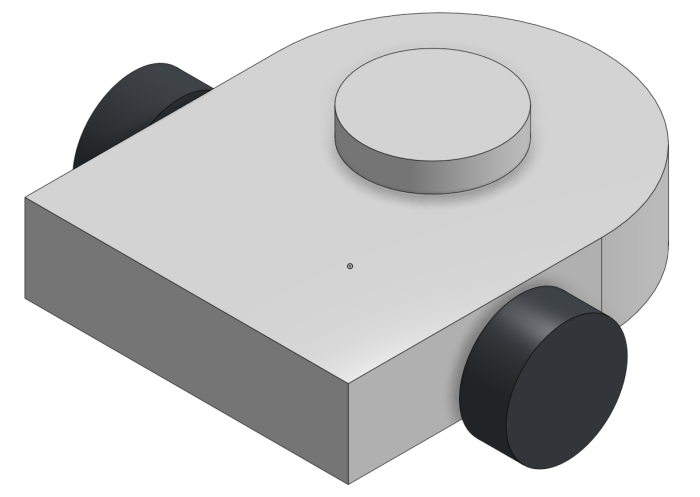

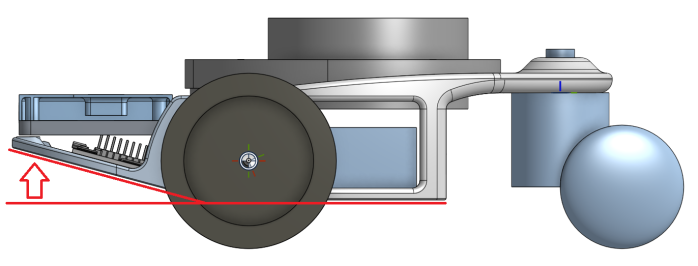

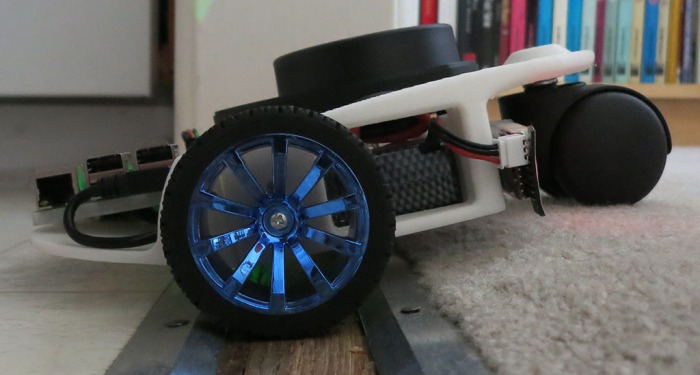

Options 1 and 2 take place strictly in software, which means the mechanical chassis will remain untouched.

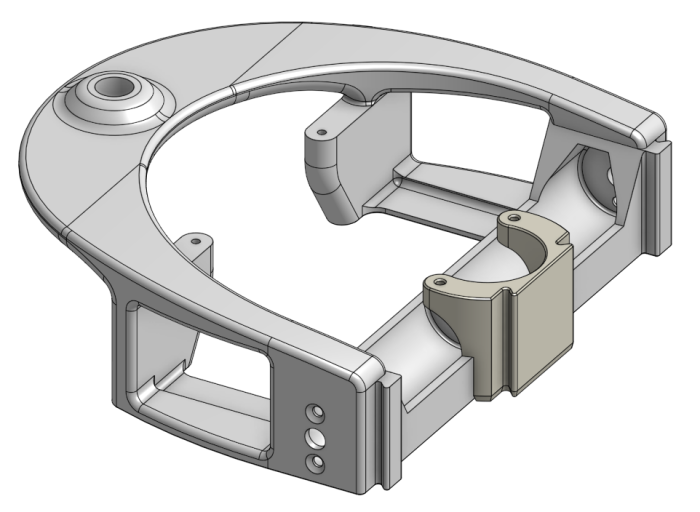

Option 3 changes Phoebe’s hardware, and that would start deviating from TurtleBot. There’s value in being TurtleBot-compatible and hence value in taking a snapshot at this point in time.

Given the above review, I declare the mechanical construction project of Phoebe the TurtleBot complete for version 1.0. As part of this, I’ve also updated the README file on Phoebe’s GitHub repository to describe its contents, because I know I’ll start forgetting!

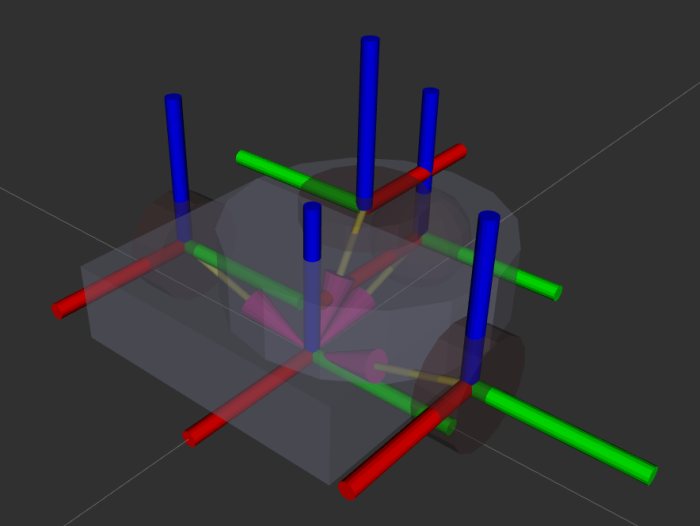

Section 1 “Robot Setup” of
