My collection of old small SSDs played a game of musical chairs to free up a drive for my TrueNAS replication machine, which gave me some hands-on time with a few Linux disk administration tools. Now that my system drive is up and running Ubuntu Server 22.04 LTS, it’s time to wade into the land of ZFS again. It’s been long enough that I had to consult the documentation to rediscover what I need to do, so I’m taking down these notes for when I need to do it again.

Installation

ZFS tools are not installed by default on Ubuntu 22.04. There seem to be two separate packages for ZFS. I don’t understand the tradeoffs between the two options, so I chose “sudo apt install zfsutils-linux” because that’s what Ubuntu’s ZFS tutorial used.
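A minimal sketch of the install step, assuming bash on Ubuntu 22.04. The “run” helper and the DRY_RUN variable are my own hypothetical additions so the commands can be previewed without touching the system, and “zfs version” is included only as a quick check that the tools and kernel module respond.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Install the ZFS userland tools, then confirm they respond.
install_zfs_tools() {
  run sudo apt install -y zfsutils-linux
  # Reports the userland and kernel module versions (OpenZFS 0.8 and later).
  run zfs version
}
```

“DRY_RUN=1 install_zfs_tools” previews the two commands; “install_zfs_tools” on its own runs them.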

Creation

Since my drive was already set up as a replication storage drive, I didn’t have to create a new ZFS pool from scratch. If I did, though, here are the steps (excerpted from the Ubuntu tutorial linked above):

- Either “fdisk -l” or “lsblk” to list all the storage devices attached to the machine.

- Find the target device name (example: /dev/sdb) and choose a pool name (example: myzfs)

- “zpool create myzfs /dev/sdb” would create a new storage pool with a single device. Many ZFS advantages require multiple disks, but as a TrueNAS replication target, a single drive is all I need.
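The pool creation steps above, sketched as bash functions. /dev/sdb and myzfs are only the example names from the list, the run/DRY_RUN preview helper is hypothetical, and the sudo prefix plus the block-device check are my own assumptions. “zpool create” takes over the whole device, so double-check the name from lsblk before running it for real.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Show name, size, type, and model so the target disk is easy to spot.
list_disks() {
  run lsblk -o NAME,SIZE,TYPE,MODEL
}

# Create a single-device pool, e.g. create_pool myzfs /dev/sdb.
# Any existing data on the device will be lost.
create_pool() {
  local pool="$1" device="$2"
  # Refuse paths that are not block devices unless we are only previewing.
  if [ "${DRY_RUN:-0}" != "1" ] && [ ! -b "$device" ]; then
    echo "error: $device is not a block device" >&2
    return 1
  fi
  run sudo zpool create "$pool" "$device"
}
```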

Once a pool exists, we need to create our first dataset on that pool.

- “zfs create myzfs/myset” to create a dataset “myset” on pool “myzfs”

- Optional: “zfs set compress=lz4 myzfs/myset” to enable LZ4 compression on the specified dataset.
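Dataset creation and the optional compression setting as a sketch, using the example name myzfs/myset from the text. The closing “zfs get compression” call is my addition to confirm the setting took effect, and run/DRY_RUN is again a hypothetical preview helper.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Create a dataset, enable LZ4 compression on it, and show the result.
create_dataset() {
  local dataset="$1"
  run sudo zfs create "$dataset"
  run sudo zfs set compress=lz4 "$dataset"
  # Confirm the setting; "zfs get" reports the value and where it came from.
  run zfs get compression "$dataset"
}
```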

Maintenance

- “zpool scrub myzfs” to check the integrity of data on disk. With a single drive, ZFS can’t automatically repair any errors it finds, but at least we would know that problems exist.

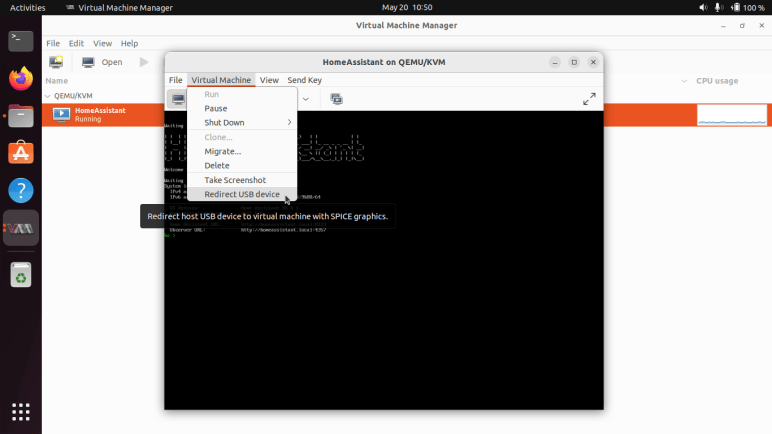

- “zpool export myzfs” is the closest thing I found to “ejecting” a ZFS pool. Ideally, we do this before we move a pool to another machine.

- “zpool import myzfs” brings an existing ZFS pool onto the system. Ideally this pool had been “export”-ed from the previous machine, but as I found out when my USB enclosure died, this was not strictly required. I was able to import it into my new replication machine. (I don’t know what risks I took when I failed to export.)

- “zfs list -t snapshot” to show all ZFS snapshots on record.

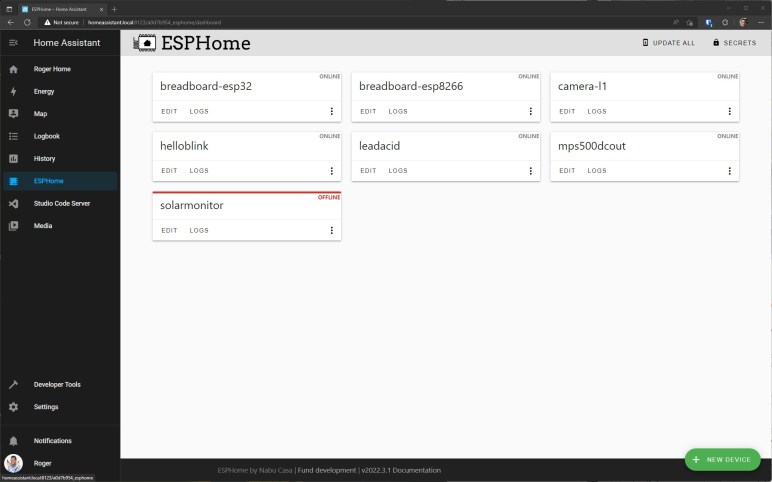
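The maintenance commands above, gathered into small bash functions as a sketch. Following the scrub with “zpool status” (to watch its progress and any errors) is my addition, and calling “zpool import” with no pool name lists the pools available to import. I put sudo on the commands that change pool state or scan devices; add it to the read-only ones too if your system requires it. run/DRY_RUN is a hypothetical preview helper.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# Start a scrub, then show pool status (scrub progress and error counts).
scrub_pool() {
  run sudo zpool scrub "$1"
  run zpool status "$1"
}

# Cleanly detach a pool before moving its disk to another machine.
export_pool() {
  run sudo zpool export "$1"
}

# With no argument, list importable pools; with a name, import that pool.
import_pool() {
  if [ "$#" -eq 0 ]; then
    run sudo zpool import
  else
    run sudo zpool import "$1"
  fi
}

# Show every snapshot on the system.
list_snapshots() {
  run zfs list -t snapshot
}
```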

TrueNAS Replication

The big unknown for me is figuring out permissions for a non-root replication user. So far, I’ve only had luck using the root account on the replication target, which is bad for many reasons. Every time I tried a non-root account, replication failed with the error “umount: only root can use "--types" option”.

- On TrueNAS: System/SSH Keypairs. “Add” to generate a new private/public key pair. Copy the public key.

- On replication target: add that public key to /root/.ssh/authorized_keys

- On TrueNAS: System/SSH Connections. “Add” to create a new connection. Enter a name and IP address, and select the keypair generated earlier. Click “Discover Remote Host Key”, which is our first test of whether SSH is set up correctly.

- On TrueNAS: Tasks/Replication Tasks. “Add” to create a replication job that uses the newly created SSH connection to push replication data to the ZFS dataset we created earlier.
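The “add that public key” step on the replication target, sketched as a bash function for the same root-only setup described above. The function name, the duplicate check, and the permission fixes are my own additions: with StrictModes (the OpenSSH default), sshd rejects key files that are writable by group or others, so the sketch sets 700 on the .ssh directory and 600 on authorized_keys. The directory is a parameter so it can be tried on a scratch directory first.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Append an SSH public key to authorized_keys in the given .ssh directory
# (defaults to /root/.ssh, matching the root-only setup described above).
add_authorized_key() {
  local pubkey="$1"
  local ssh_dir="${2:-/root/.ssh}"
  local auth_file="$ssh_dir/authorized_keys"

  # Lock down permissions so sshd's StrictModes check accepts the file.
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$auth_file"
  chmod 600 "$auth_file"

  # Append only if this exact key line is not already present.
  if ! grep -qxF -- "$pubkey" "$auth_file"; then
    # Avoid gluing the new key onto a last line that lacks a trailing newline.
    if [ -s "$auth_file" ] && [ -n "$(tail -c 1 "$auth_file")" ]; then
      printf '\n' >> "$auth_file"
    fi
    printf '%s\n' "$pubkey" >> "$auth_file"
  fi
}
```

On the target, as root, this would be called with the key text copied from TrueNAS, for example add_authorized_key "ssh-rsa AAAA... truenas" (a placeholder, not a real key).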

Monitor Disk Activity

The problem with an automated task going directly to root is that I couldn’t tell what (if anything) was happening. There are several Linux tools to monitor disk activity. I first tried “iotop”, but I was unhappy that it required admin privileges and that this is not considered a bug. (“Please stop opening bugs on this.”) Looking for an alternative, I found this list and decided dstat was the best fit for my needs. It is not installed on Ubuntu Server by default, but I could run “sudo apt install pcp” to install it, followed by “dstat -cd --disk-util --disk-tps” to see the activity level of all disks.
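The dstat setup as a sketch. The optional delay argument (dstat’s positional refresh interval, in seconds) and its default of 5 are my own choices rather than part of the original command, and run/DRY_RUN is again a hypothetical preview helper.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# On Ubuntu Server, dstat comes from the pcp package.
install_dstat() {
  run sudo apt install -y pcp
}

# CPU (-c) and disk read/write (-d), plus per-disk utilization and
# transfers per second, refreshed every $1 seconds (default 5).
monitor_disks() {
  local delay="${1:-5}"
  run dstat -cd --disk-util --disk-tps "$delay"
}
```

Running “monitor_disks” while a replication task is active should show whether the target disk is actually busy, without needing root.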