We’ve found our way to the source code for the simple turtlebot3_drive node. It’s a good starting point for exploring how to write code in ROS, and it’ll be worth returning to in the future. In the meantime I keep looking at the other fun stuff available in ROS… like making the robot a little bit smarter. Enter the TurtleBot SLAM (simultaneous localization and mapping) demonstration outlined in the manual.

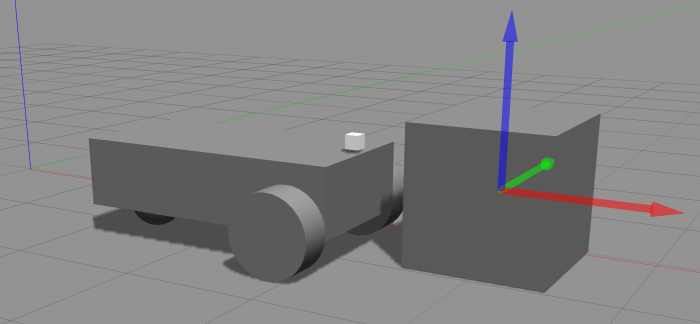

Like all of the TurtleBot3 demo code from the e-Manual, we start by launching the Gazebo simulation environment.

roslaunch turtlebot3_gazebo turtlebot3_world.launch

Then we can launch the SLAM node, which can run one of several different algorithms. Each has strengths and weaknesses; this one has the strength of “it’s what’s in the manual” for a starting point.

roslaunch turtlebot3_slam turtlebot3_slam.launch slam_methods:=gmapping

Note: If this node fails to launch with the error ERROR: cannot launch node of type [gmapping/slam_gmapping]: gmapping, it means the required package has not been installed. Install it (on Ubuntu, for ROS Kinetic) with sudo apt install ros-kinetic-slam-gmapping.

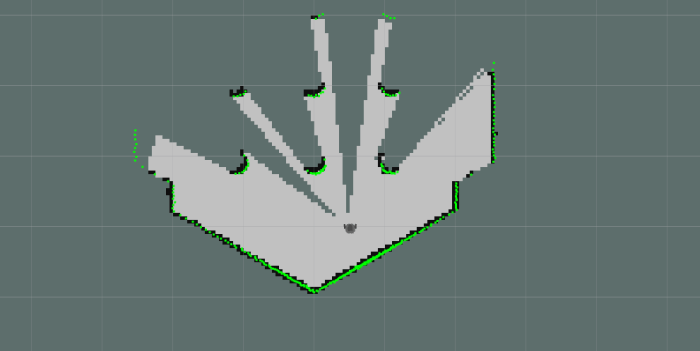

If successful, this will launch RViz and we can see the robot’s map drawn using what it can detect from its initial position.

To fill out the rest of the map, our virtual TurtleBot needs to explore its space. The manual suggests running the turtlebot3_teleop module so we can use our keyboard to drive TurtleBot around turtle world. But I think it’s more fun to watch the robot map its own world, so let’s launch turtlebot3_drive instead.

roslaunch turtlebot3_gazebo turtlebot3_simulation.launch

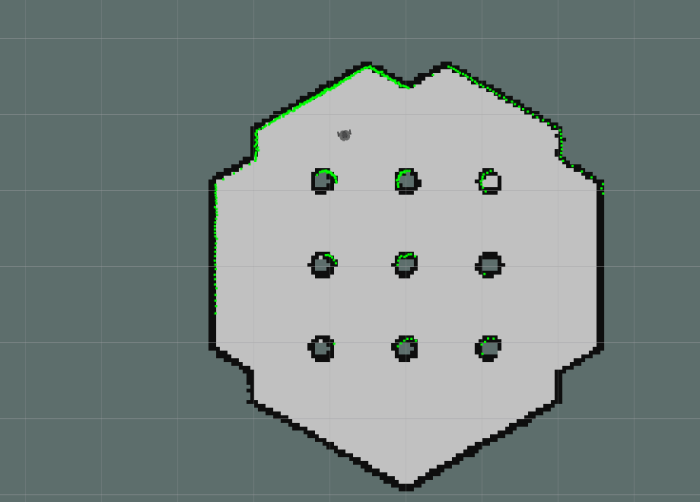

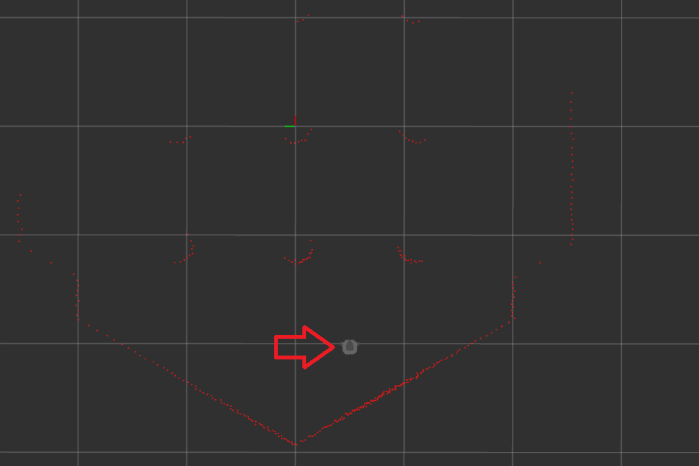

Using this simple self-exploration mode, the turtle world will be mapped out eventually. How long this takes depends on luck. One interesting observation is that there’s no explicit randomness in the turtlebot3_drive source code. But because the Gazebo simulation environment adds noise to the sensor data to simulate the unpredictability of real sensors, turtlebot3_drive ends up performing a random walk.
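To convince myself that sensor noise alone is enough to turn a fixed rule into a random walk, here is a minimal sketch in plain Python. It is not the turtlebot3_drive logic and does not use ROS or Gazebo: the square room, the `simulate` function, the single forward range reading, and all the numbers (speed, turn angle, safe distance, noise level) are my own assumptions for illustration.

```python
import math
import random


def simulate(noise_std, seed, steps=500, room=4.0):
    """Drive a point robot with a fixed rule; only the range reading is noisy.

    Hypothetical stand-in for a "drive until blocked, then turn" controller.
    """
    rng = random.Random(seed)
    x, y, heading = room / 2, room / 2, 0.0
    speed = 0.05              # distance moved per step
    turn = math.radians(30)   # fixed turn angle, no randomness here
    safe = 0.5                # turn when the measured range drops below this

    for _ in range(steps):
        dx, dy = math.cos(heading), math.sin(heading)

        # True distance to the wall straight ahead in a square room.
        candidates = []
        if dx > 1e-9:
            candidates.append((room - x) / dx)
        elif dx < -1e-9:
            candidates.append(-x / dx)
        if dy > 1e-9:
            candidates.append((room - y) / dy)
        elif dy < -1e-9:
            candidates.append(-y / dy)
        true_range = min(candidates)

        # The only source of randomness: Gaussian noise on the reading.
        measured = true_range + rng.gauss(0.0, noise_std)

        if measured < safe:
            heading += turn
        else:
            # Move forward, clamped so the robot stays inside the room.
            x = min(max(x + speed * dx, 0.0), room)
            y = min(max(y + speed * dy, 0.0), room)

    return round(x, 6), round(y, 6), round(heading, 6)


if __name__ == "__main__":
    for seed in range(3):
        print("no noise  ", simulate(0.0, seed))
    for seed in range(3):
        print("with noise", simulate(0.05, seed))
```

With the noise set to zero, every run follows the same path regardless of seed. With a little noise, the step at which the robot decides to turn shifts from run to run, and the paths drift apart from there.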

Once our robot has completed mapping its world, we can save the map for the navigation demo.

rosrun map_server map_saver -f ~/map
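The command above should leave a map.pgm image and a map.yaml metadata file in the home directory. As a rough way to check how much of the world got explored, here is a small sketch that reads the image and counts known cells. The function names `read_pgm` and `explored_fraction` are my own, and the pixel values are an assumption based on map_saver's default output as I understand it (254 for free, 0 for occupied, anything else treated as unknown), so verify against your own file.

```python
from collections import Counter


def read_pgm(data):
    """Parse a binary 8-bit PGM (P5) from bytes; returns (width, height, pixels)."""
    tokens, pos = [], 0
    while len(tokens) < 4:
        # Skip whitespace between header fields.
        while pos < len(data) and data[pos:pos + 1].isspace():
            pos += 1
        # Header comments start with '#' and run to the end of the line.
        if data[pos:pos + 1] == b"#":
            pos = data.index(b"\n", pos) + 1
            continue
        start = pos
        while pos < len(data) and not data[pos:pos + 1].isspace():
            pos += 1
        tokens.append(data[start:pos])

    magic, width, height, maxval = tokens
    if magic != b"P5" or int(maxval) > 255:
        raise ValueError("expected a binary 8-bit PGM (P5)")
    width, height = int(width), int(height)

    pos += 1  # exactly one whitespace byte separates header from pixels
    pixels = data[pos:pos + width * height]
    if len(pixels) != width * height:
        raise ValueError("truncated pixel data")
    return width, height, pixels


def explored_fraction(pixels, free=254, occupied=0):
    """Fraction of cells marked free or occupied (assumed pixel values)."""
    if not pixels:
        return 0.0
    counts = Counter(pixels)
    return (counts[free] + counts[occupied]) / len(pixels)


if __name__ == "__main__":
    import os

    path = os.path.expanduser("~/map.pgm")
    with open(path, "rb") as f:
        width, height, pixels = read_pgm(f.read())
    print(width, height, explored_fraction(pixels))
```

Saving the map a few times during a run and comparing the fractions gives a crude sense of how quickly the random walk is filling things in.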

More details on how to tune SLAM algorithm parameters are in the SLAM chapter of the manual. It is mainly focused on running the real robot rather than the simulation, but most of the points still apply.
