Along with the “keyboard is broken” laptop, I was also asked to look into a mid-tower PC that would no longer turn on. I grabbed a power supply I had on hand and plugged it into the motherboard, which happily powered up. Diagnosis: dead power supply. I bought a new power supply for the PC to bring it back to life, and now it’s time to take apart the dead one to see if I can find anything interesting. Could it be as easy as a popped circuit breaker or a blown fuse?

According to the label, the manufacturer has the impossibly unsearchable name of “High Power”. Fortunately, the model number HP1-J600GD-F12S is specific enough to find a product page on the manufacturer’s site. The exact model string also returned a hit for a power supply under Newegg’s house brand Rosewill, implying the same device was sold under Newegg’s own name. Amusingly, Newegg’s Rosewill product listing included pictures with “High Power” embossed on the side.

If there is a user-replaceable fuse or a user-accessible circuit breaker, it should be adjacent to the power socket and switch. I saw nothing promising at the expected location or anywhere else along the exterior.

Which meant it was time to void the warranty.

The exterior enclosure consisted of two pieces of sheet metal, each bent into a U shape and held together with four fasteners. Once they were pried apart, I had to cut a few more zip-ties holding the cooling fan power wire in place before I could unplug it to get a clear view of the interior. Everything looked clean. In fact, it looked too clean: either this computer hadn’t been used very much before it blew, or it lived in a location with good air filtration to remove dust.

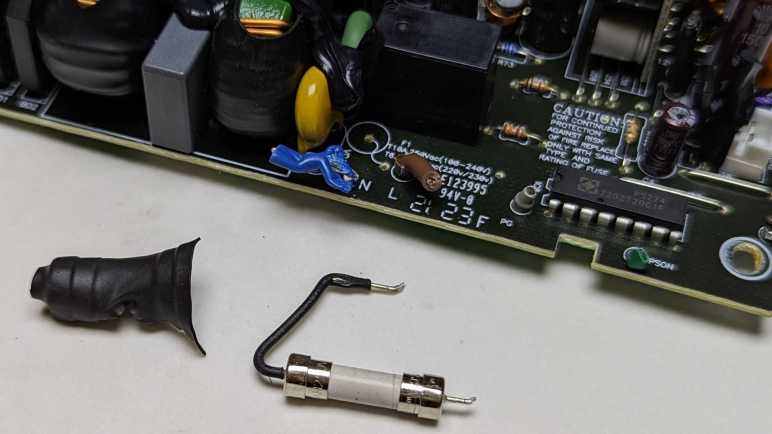

Still on the hunt for a circuit breaker or a fuse, I found the standard boilerplate fuse replacement warning. Usually, this kind of language would be printed immediately adjacent to a user-serviceable fuse. But getting here required breaking the warranty seal and none of the adjacent components looked like a fuse to me.

Disassembly continued until I could see the circuit traces at the bottom of the board. Getting here required some destructive cutting of wires, so there’s no bringing this thing back online. Perhaps someone with better skills could get here nondestructively, but I lacked either the skill or the motivation to figure things out nicely. I saw no obviously damaged components or traces on this side, either. But more importantly, now I could see that the 120V AC line voltage input wire is connected to a single wire. That must lead to the fuse.

Turning the board back over, I saw the line voltage input wire (brown) connected to a black wire that led to a cylinder covered in heat-shrink tubing and held in place by black epoxy. The shape of that cylinder was consistent with a fuse. The heat-shrink and epoxy meant this part was really not intended to be easily replaced.

Once the cylinder was unsoldered, I could see the electronic schematic symbol for a fuse printed on the circuit board. The “F” in its designation “F1” is consistent with “Fuse”, as are the amperage/voltage ratings listed below. This fuse is a few centimeters away from the caution message I noticed earlier, which was farther away than I had expected. My multimeter showed no continuity across this device, so the fuse had indeed blown. I cut off the heat-shrink hoping to see a burnt filament inside a glass tube, but this fuse didn’t use a glass tube.

I started this teardown wondering if it was “as easy as a popped circuit breaker or a blown fuse”. While it was indeed a blown fuse, a nondestructive replacement would not have been easy. I don’t know why the fuse on this device was designed to be so difficult to access and replace, but I appreciate that it is far better to blow a fuse than for a failing power supply to start a fire.