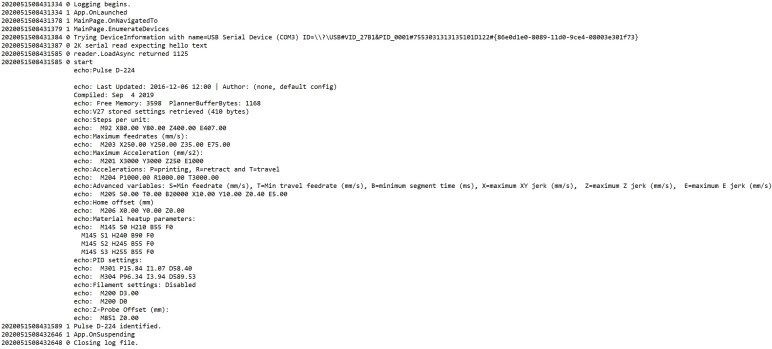

It was fun to get a taste of Angular completely risk-free, without installing anything on my computer, courtesy of StackBlitz. I saw enough to believe Angular is worth additional exploration, so it’s time to go ahead and install those developer tools. For this run, I decided to set it up on my MacBook Air running macOS Catalina. Following the installation directions, Angular CLI installation failed with:

warn checkPermissions Missing write access to /usr/local/lib/node_modules

I probably could get past this problem with sudo, but I looked around for a better way. According to this Stack Overflow thread, I need to take ownership of a few directories important to Node.js. Rather than following the list blindly, I only took ownership as needed, starting with /usr/local/lib/node_modules because that was the one specifically named in that error message. After that, I saw a different error:

┌──────────────────────────────────────────────────────────┐

│ npm update check failed │

│ Try running with sudo or get access │

│ to the local update config store via │

│ sudo chown -R $USER:$(id -gn $USER) /Users/roger/.config │

└──────────────────────────────────────────────────────────┘

So I grabbed ownership of .config, and all seems sorted on the permissions front. With the CLI tools installed, I tried to create a new app. It appeared to mostly work but towards the end I saw an error:

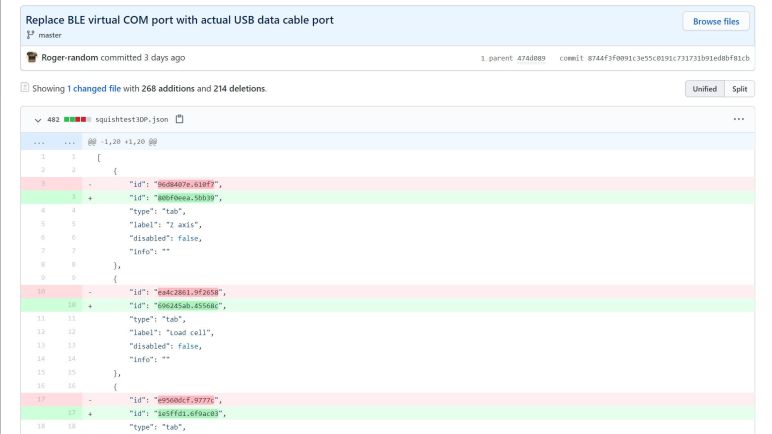

xcrun: error: invalid active developer path (/Library/Developer/CommandLineTools), missing xcrun at: /Library/Developer/CommandLineTools/usr/bin/xcrun

I have no idea what xcrun had to do with anything. Searching around for what this message might mean, I found a hit on Stack Exchange explaining this is a very cryptic way to tell me I haven’t yet installed developer tools on my MacBook. In this specific case, git has not yet been installed. I don’t know what xcrun has to do with git, but it appears to be involved in Angular CLI setting up a new project. I guess it calls git init as part of the project creation? In any case, running xcode-select --install got me started. Once git was installed, I configured the required global settings (name and email). Once that was done, I could successfully run the new Angular creation script, whose output at the end confirms it initializes the project as a git repository.

✔ Packages installed successfully.

Successfully initialized git.

Running ng serve allowed me to load up the boilerplate default Angular application screen in my browser, confirming project creation success and giving me the green light to proceed with setting up for the tutorial.
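As a recap, the checks behind the fixes above could be sketched as a small shell script. This is only a sketch under a few assumptions: suggest_chown and check_tool are hypothetical helper names made up for illustration (they are not part of npm or the Angular CLI), and the script only reports problems and prints a suggested chown command rather than changing anything itself.

```shell
#!/bin/sh
# Hypothetical preflight helpers; the names are illustrative only.

# Report whether the current user can write to a directory.
# If not, print a chown command that would take ownership of it.
suggest_chown() {
  if [ ! -e "$1" ]; then
    echo "absent: $1"
  elif [ -w "$1" ]; then
    echo "ok: $1"
  else
    echo "sudo chown -R $(id -un):$(id -gn) $1"
  fi
}

# Report whether a tool actually runs. The tool is executed rather than
# just looked up on PATH, because a command can be present and still fail,
# as git did here with the xcrun error.
check_tool() {
  if "$1" --version >/dev/null 2>&1; then
    echo "ok: $1"
  else
    echo "not working: $1"
  fi
}

# The two directories named in the error messages above.
suggest_chown /usr/local/lib/node_modules
suggest_chown "$HOME/.config"

# The tools involved in installing the CLI and creating a project.
check_tool git
check_tool node
check_tool npm
```

If git shows up as not working on a Mac, running xcode-select --install is the fix described above, followed by setting the global name and email with git config --global user.name and git config --global user.email.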