When I was given a FormLabs Form 1+ resin 3D printer, I had dreams of high detail resin printing. Alas, I eventually found that printer to be broken beyond the point of practical repair. It was free and worth every penny as I enjoyed taking it apart. But it put the thought of resin printing in my mind. So, when Monoprice cleared out one of their resin printers (item number 30994) I grabbed one. I could never resist a clearance on something I wished for, as I did earlier with a graphic drawing display.

As far as support is concerned, I understand this device to be a rebranded Wanhao Duplicator 7 Plus. There are a few changes, such as the lack of orange acrylic windows into the print volume. For the price, I can probably deal with it.

The product was shipped directly in its brown product box. At least it didn’t have fancy graphics, which reduced its attraction to thieves.

At the top of the box is the manual (good), FEP film (eh?) and a “please don’t make us restock” flyer.

Under them is a handle. Should I lift it? Looks very tempting.

I decided not to lift the handle and pulled out the top foam block instead.

As it turned out, lifting the handle probably would have been fine, because now the handle is all I have to pull on.

It appears to be the print volume lid, with a red strip around its base. Later I determined the red strip was not packing material; it is intended to seal against light.

Once that lid was lifted, I had no obvious handles to pull on.

I carefully set the box on its side and slid out whatever’s in the bag.

The bag contained everything else, as there was only a block of packing foam left in the box once the bag was removed.

Everything else is packed within what will eventually be the print volume.

They were held by these very beefy zip ties.

At the top of the stack is the build plate. Looks like there is a bit of manufacturing residue that I have to clean off before I use it.

A plastic bag held a few large accessories.

A cardboard box held some of the remaining accessories.

Contents of the box. I see a small bottle of starter resin, a container (for leftover resin?), a few gloves, fasteners, and a USB flash drive presumably holding software.

Under that cardboard box is the resin build tank.

A sheet of FEP film was already installed in the frame. The bag at the top of the box is apparently an extra sheet.

Initially I was not sure whether the tape around the screen was intended to be peeled off. I left it on and that was a good thing. Later research found that it holds the screen in place and is a factory-applied version of what some early Duplicator 7 owners did manually to resolve a design flaw.

The manual hints that the box used to include additional accessories. Playing with image contrast, I can read them to be “HDMI Cable” (why would there be one?), “USB Cable” (I have plenty), and “Print removal tool” (but there is a spatula?).

The icon for the slicing operation is a slice of cake, looking almost exactly like the icon in Portal. I hope this is not a lie.

Here’s everything in the box laid out on the floor.

Here’s a closeup of the base of the Z-axis. It uses an ACME leadscrew and optical endstop as the Form 1+ did, but here we see a shaft coupler between the motor and leadscrew. The Form 1+ had a motor whose output shaft is the leadscrew, which eliminates issues introduced by a shaft coupler but costs more to manufacture. This printer also uses precision ground shafts for guidance instead of the linear bearing carriage used by the Form 1+. That is another place where the Form 1+ paid a higher cost for increased precision.

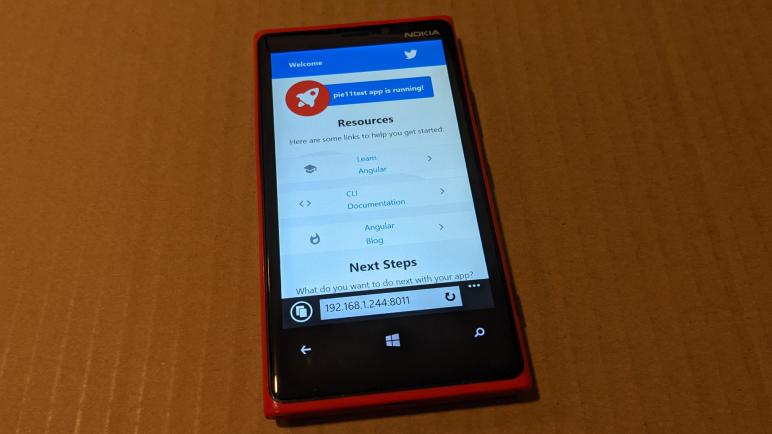

Plugged it in and turned it on: It lives!

Here is the machine status screen. Looks like the printer itself is up and running, but this is just the beginning. A resin printer needs more supporting equipment before I can start printing (good) parts.